UK and EU diverge over the future regulation of AI

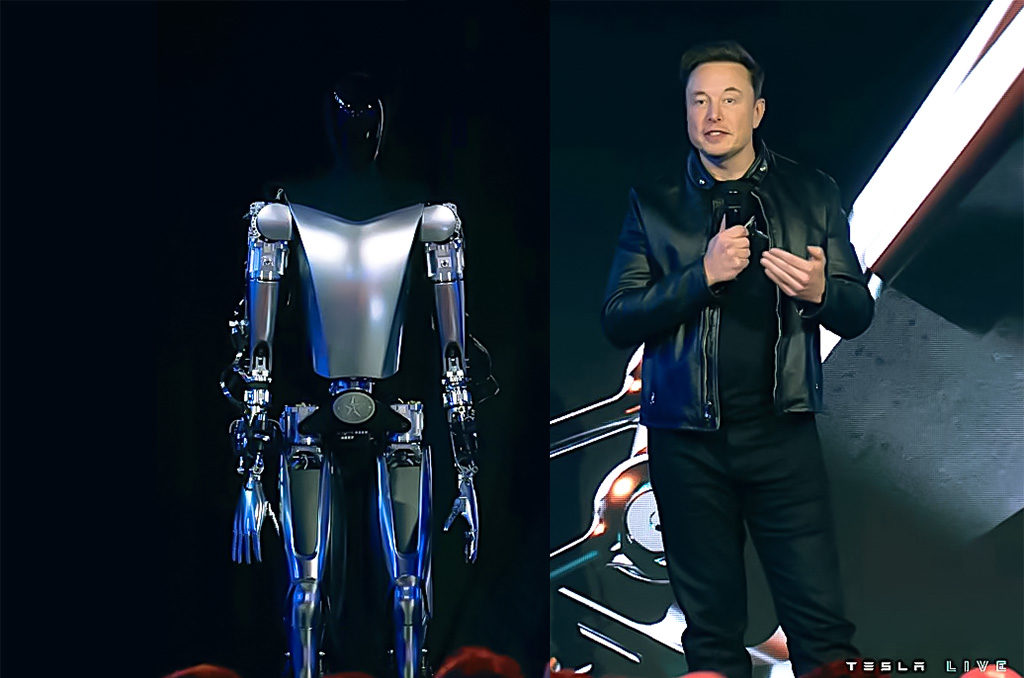

Elon Musk says an Optimus humanoid robot that Tesla is developing could be priced at less than $20,000 and wind up doing most of the work while people reap the benefits. – Copyright POOL/AFP/File Philip FONG

The UK is set to rethink its approach to AI legislation, which could lead to a divergence between the UK’s and the EU’s legislative approaches. What does this mean for the future development of artificial intelligence?

Scott Dawson, Head of Strategic Partnerships at DECTA, an end-to-end payments solutions provider, examines the implications of the regulatory difference for Digital Journal.

Dawson begins by considering the purpose of legislation: “Ideally, the role of regulation should be to facilitate innovation, and the EU’s AI Act is a good example of regulation that has the potential to do just that.”

The Act assigns applications of AI to three risk categories:

- Applications and systems that create an unacceptable risk, such as government-run social scoring of the type used in China, are banned.

- High-risk applications, such as a CV-scanning tool that ranks job applicants, are subject to specific legal requirements.

- Applications not explicitly banned or listed as high-risk are largely left unregulated.

Adopting a risk framework means: “Classifying AI systems based on risk will allow fintech companies to benefit from the new capabilities of the technology while keeping a regulatory eye on the ‘black box’ problem. As AI models become more complex and opaque, their workings and reasoning are ever more difficult for any one human to understand.”

Dawson draws out both the positive and negative aspects of the legislation: “The act emphasises the need for transparent AI, ensuring companies can explain how algorithms arrive at decisions. Naturally, there are considerations presented by this approach, but by creating a conceptual structure for firms to innovate within, the EU is creating a regulatory framework we can pre-emptively manage.”

In terms of the different path that the UK appears to be adopting, Dawson observes: “While the UK hasn’t enacted similar legislation, its ‘wait and see’ approach poses challenges, especially because fintech firms aiming for the EU market will need to comply with the Act’s requirements.”

Although this is potentially clearer than the European Union approach, there remains some room for improvement. Dawson says: “This includes increased transparency and robust due diligence for AI used in areas like credit scoring. Once again, an area where we need to understand why machines make the decisions that they do – and we’re not there yet.”

Dawson extends his assessment to make a recommendation: “During the UK’s AI Safety Summit in Bletchley Park last year, Rishi Sunak suggested the UK should be a leader in this space. However, it will need to make more decisive moves than just waiting and seeing if that is to be the case.”

In terms of the implications, Dawson thinks: “If it does take the reins, regulating for the sake of innovation is very much an option. While the UK hasn’t adopted its own AI legislation, the EU Act’s influence is undeniable. UK’s fintech sector must adapt to the new standards to ensure continued access to the EU market and foster trust in AI-driven financial services.”
