New app enables real-time, full-body motion capture using a smartphone

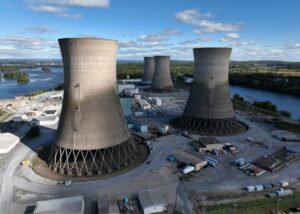

A researcher with the European Space Agency in Darmstadt, Germany, equipped with a VR headset and motion controllers. — Photo: ESA, via Wikipedia (CC BY-SA 3.0 IGO)

A new app has been shown to perform effective real-time, full-body motion capture and 3D human translation using a smartphone. Engineers at Northwestern University unveiled the app at the ACM Symposium on User Interface Software and Technology, held in Pittsburgh, U.S.

Called MobilePoser, the app is designed to make motion capture technology more accessible. It does this by using the sensors already built into consumer devices (smartphones, smart watches and wireless earbuds), so it does not require specialized rooms, expensive equipment, bulky cameras or an array of sensors.

Through the sensors, MobilePoser accurately tracks a person’s full-body pose and global translation in space in real time.

At the heart of the technology are inertial measurement units (IMUs). An IMU combines several sensors (accelerometers, gyroscopes and magnetometers) to measure a body’s movement and orientation.
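To illustrate how a single IMU sensor can reveal orientation, here is a minimal Python sketch that estimates tilt from one accelerometer reading taken while the device is at rest, when the sensor measures only gravity. The function name `tilt_from_accel` and the axis convention are assumptions made for illustration; this is a textbook calculation, not MobilePoser's own code.

```python
import math
from typing import Tuple


def tilt_from_accel(ax: float, ay: float, az: float) -> Tuple[float, float]:
    """Return (roll, pitch) in radians from one accelerometer sample.

    Assumes the device is stationary, so the reading is gravity alone,
    and uses one common axis convention (z pointing out of the screen).
    """
    # Roll: rotation about the x axis, from how gravity splits between y and z.
    roll = math.atan2(ay, az)
    # Pitch: rotation about the y axis, from gravity along x versus the y-z plane.
    pitch = math.atan2(-ax, math.hypot(ay, az))
    return roll, pitch


# A phone lying flat on a table reads roughly (0, 0, 9.81) m/s^2.
roll, pitch = tilt_from_accel(0.0, 0.0, 9.81)
print(math.degrees(roll), math.degrees(pitch))
```

An accelerometer alone cannot determine heading, and its readings become unreliable once the device starts moving, which is one reason IMUs pair it with gyroscopes and magnetometers.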

The app includes a custom-built, multi-stage artificial intelligence (AI) algorithm, which was trained using a publicly available, large dataset of synthesized IMU measurements generated from high-quality motion capture data. The AI algorithm estimates joint positions and joint rotations, walking speed and direction, and contact between the user’s feet and the ground.
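As a rough illustration of how estimates of walking speed and direction can be turned into a position in space, the following Python sketch accumulates per-frame estimates into a 2D path (a technique often called dead reckoning). The function `integrate_path`, its inputs and the fixed time step are hypothetical; the article does not describe how MobilePoser performs this step internally.

```python
import math
from typing import Iterable, List, Tuple


def integrate_path(
    steps: Iterable[Tuple[float, float]],
    dt: float,
    start: Tuple[float, float] = (0.0, 0.0),
) -> List[Tuple[float, float]]:
    """Accumulate (speed in m/s, heading in radians) estimates into positions.

    `dt` is the assumed time in seconds between consecutive estimates.
    Returns the list of positions, beginning with `start`.
    """
    x, y = start
    path = [(x, y)]
    for speed, heading in steps:
        # Move along the current heading for one time step.
        x += speed * math.cos(heading) * dt
        y += speed * math.sin(heading) * dt
        path.append((x, y))
    return path


# Ten estimates of 1 m/s along the x axis, 0.1 s apart: about 1 m travelled.
path = integrate_path([(1.0, 0.0)] * 10, dt=0.1)
print(path[-1])
```

Small errors in each estimate add up over time in a scheme like this, so extra cues such as foot-ground contact can help limit drift.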

By combining sensor data with machine-learning algorithms and physics, MobilePoser accurately tracks a person’s 3D position and movement in space in real time. The app could be used for activities including immersive gaming, health tracking and indoor navigation.

The app is of interest because of the limitations of comparable technology. Other motion-sensing systems, such as Microsoft Kinect, rely on stationary cameras that view body movements. These systems work well as long as a person stays within the camera’s field of view, but they are impractical for mobile or on-the-go applications.

MobilePoser has a tracking error of just 8 to 10 centimetres.
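The article does not say how this error figure was measured. One common way to score motion capture is the average distance between predicted and reference joint positions, and the Python sketch below shows that calculation under that assumption. The function name `mean_joint_error_cm` and the sample coordinates are made up for illustration.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float, float]  # joint position in metres (x, y, z)


def mean_joint_error_cm(predicted: Sequence[Point], reference: Sequence[Point]) -> float:
    """Average Euclidean distance between matching joints, in centimetres."""
    if not predicted or len(predicted) != len(reference):
        raise ValueError("need two non-empty joint lists of equal length")
    total = sum(math.dist(p, r) for p, r in zip(predicted, reference))
    return 100.0 * total / len(predicted)


# Two illustrative joints, each 10 cm away from its reference position.
predicted = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)]
reference = [(0.0, 0.0, 0.1), (1.0, 0.1, 0.0)]
print(mean_joint_error_cm(predicted, reference))
```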

Northwestern’s Karan Ahuja states: “Running in real time on mobile devices, MobilePoser achieves state-of-the-art accuracy through advanced machine learning and physics-based optimization, unlocking new possibilities in gaming, fitness and indoor navigation without needing specialized equipment.”

Ahuja adds: “This technology marks a significant leap toward mobile motion capture, making immersive experiences more accessible and opening doors for innovative applications across various industries.”

An expert in human-computer interaction, Ahuja is the Lisa Wissner-Slivka and Benjamin Slivka Assistant Professor of Computer Science at Northwestern’s McCormick School of Engineering, where he directs the Sensing, Perception, Interactive Computing and Experience (SPICE) Lab.
