AI summits and executive orders: Are politicians leaving it too late?

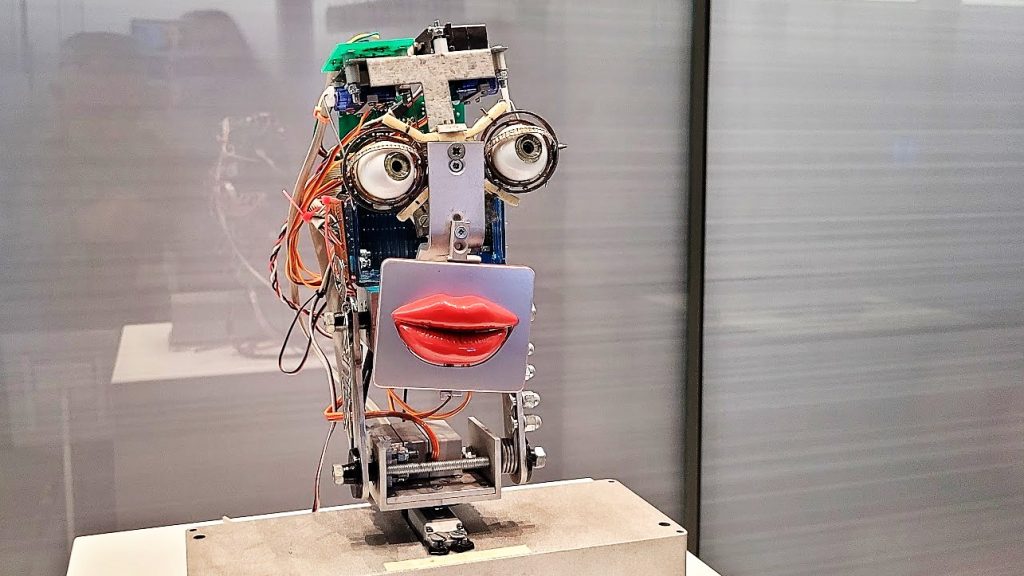

Representation of artificial intelligence at The Science Museum, London. Image © Tim Sandle

In the U.K., an AI safety summit is taking place at Bletchley Park, where Alan Turing carried out his pioneering wartime codebreaking work. The event includes a keynote address by Prime Minister Rishi Sunak setting out the benefits that advances in AI could bring to the British economy.

The summit will address technologies such as “frontier AI” models: advanced large language models (LLMs) like those developed by OpenAI, Anthropic, and Cohere.

This raises key ethical questions, such as how these forms of AI can be tested and monitored to ensure they do not cause harm.

Some 100 world leaders, technology company bosses and academics are attending. The aim is to close the event by releasing a document called the Bletchley Declaration, which will call for global cooperation on tackling the risks of AI, including potential breaches of privacy and the displacement of jobs.

Meanwhile, in the U.S., the White House has issued an Executive Order that establishes new standards for AI safety and security, protects the privacy of citizens, advances equity and civil rights, stands up for consumers and workers, promotes innovation and competition, and advances U.S. global leadership.

Responding to President Biden’s new executive order on AI, Stuart Wells, CTO of Jumio, considers its significance for businesses and for the development of the technology.

Wells welcomes the latest announcement: “President Biden’s executive order on AI is a timely and critical step, as AI-enabled fraud becomes increasingly harder to detect.”

In terms of the risks from fraud, Wells finds: “This poses a serious threat to individuals and organizations alike, as fraudsters can use AI to deceive people into revealing sensitive information or taking actions that could harm them financially or otherwise.”

Wells contends that it is also important for businesses to take steps to address the risks AI poses to their security: “In light of this growing threat, organizations must elevate the protection of their users. This can be accomplished by developing and implementing standards and best practices for detecting AI-generated content and authenticating official content and user identities.”

As for how businesses can tackle these problems, Wells proposes that this can be “done through tactics such as deploying biometrics-based authentication methods, including fingerprint or facial recognition, and conducting continuous content authenticity checks.”

Given the rapid pace of AI development, Wells sees an urgent need for businesses to put plans in motion: “Organizations must act now to protect their users from increasingly sophisticated AI-enabled fraud and deception methods. Enhancing identity verification tactics is essential to mitigate this risk.”

However, even with the summit and the executive order, have politicians left things too late? If they continue to regulate AI this slowly, states risk missing the chance to prevent potential hazards and dangerous misuses of the technology.
